The Contrarian Digest [Week 17]

The Contrarian Digest is my weekly pick of LinkedIn posts I couldn’t ignore. Smart ideas, bold perspectives, or conversations worth having.

No algorithms, no hype… Just real ideas worth your time.

Let’s dive in [17th Edition] 👇

1️⃣ When Hype Eclipses Reality: A Fable for the AI Age – Disesdi Susanna Cox

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: What if AI isn’t evolving, just getting louder? A sharp, contrarian eye on tech’s grand narratives.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/efAwFHdW

2️⃣ The 1:1:1 Rule for Raising Thinkers (Not Just Scrollers) – Sol Rashidi, MBA

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: If kids can’t reach the kitchen without a screen (and they CAN’T), it’s time to RESET. Turn screen time into a currency, earned, not given. Raise kids who “think,” not just swipe.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/eag7wiMD

3️⃣ Is AI Just a Clever Illusion of Intelligence? – Stuart Winter-Tear

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: In the 1960s, a chatbot scared its creator so profoundly that he destroyed it. Are we blindly repeating the same mistake today? Only this time, at an unprecedented scale.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/ezB5yVKh

4️⃣ The Disruption YOU Didn’t Want – R.J. Abbott

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: Most creatives worship “disruption” until it disrupts them. Does AI panic reveal more about egos than ethics?

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/ed8sJ9qv

💭𝐌𝐲 𝐭𝐚𝐤𝐞: In this week’s post, I wrote about what AI means for how culture is made and consumed (and why I think it will fracture into 3 categories) – https://lnkd.in/epyFwA_z

5️⃣ How AI is Creating a Generation of Professional Frauds – Manuel Kistner

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: A marketing director bragged about being 10x more productive with AI. Then she couldn’t explain her own work. Here’s the trap 90% miss.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/edbZ_zFm

6️⃣ You Can’t See Your Future Until It’s Past – Shashank Sharma

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: Life makes sense backwards but must be lived forwards. Why waiting for “clarity” is the biggest trap keeping you stuck.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/eMYUS27q

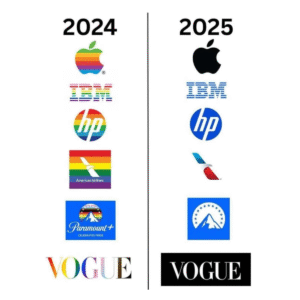

7️⃣ When Values Have Terms and Conditions – Jatin Modi

Worth reading, regardless of where you stand on the overarching topic.

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: Corporations don’t have “principles.” They have PR strategies. When “values” change with political winds, trust isn’t broken. It was never real.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/enmPjcMf

8️⃣ The Price of Sharing: What AI Really Knows About You – Anna Branten

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: It started with innocent questions. Now AI knows your deepest vulnerabilities. The hidden cost of digital intimacy nobody talks about.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/esHYN_GQ

——-

Which of these topics resonates with you the most?

Share your thoughts in the comments!

To your success,

Gaetan Portaels

Original publication date: June 10, 2025

READ THE FULL POSTS BELOW (and please, don’t forget to follow the authors)

1️⃣ When Hype Eclipses Reality: A Fable for the AI Age – Disesdi Susanna Cox

Imagine you spent your whole life studying squirrels, because they are fascinating and cool.

Then all of a sudden one day somebody builds an animatronic squirrel that sings 3 songs and asks you about your day, and you laugh because it’s obviously not a real squirrel, but kind of entertaining nonetheless.

But then everyone’s like OMG THE SQUIRRELS ARE EVOLVING because they think this one is real and it can really talk. Bonus: if squirrels now talk like people, they can do human jobs and we don’t have to pay them. SCORE

Suddenly there’s a whole cottage industry of squirrel consultants and “squirrel whisperers” and squirrel expert certifications where you can pay money to learn how squirrels evolved to speak human language and how to prompt the squirrels to get them to output the best pitch decks or whatever.

Now it’s “squirrels will replace all PhDs” and “squirrels will cure cancer” and “squirrels will stop all wars”. Obviously this will all take place just as soon as the squirrels evolve a tiny bit more than just singing the same 3 songs and asking about your day. So basically any day now.

Immediately arXiv is flooded with mathy papers on squirrel genomics and psychology. All job listings now require 10+ years of squirrel experience.

CEOs announce that squirrel evolution necessitates workforce cuts.

Microsoft lays off everyone who isn’t a squirrel, slashing the company headcount by nearly 15%.

“But with squirrels having this much capability, surely they could wipe out all of humanity”. So now we must devote many hours of philosophical waxing to how best to avoid this imminent danger.

The squirrel maximalists think we need to maximize squirrel breeding in order to get the best possible squirrels. Clearly only a very few highly ethical super geniuses could wrangle the squirrels, though.

The squirrel doomers are pretty sure the squirrels are already plotting against humans, and this evolution points to a terrifying future where the squirrels can read and we’ll all be judged according to what we said about squirrels online. Obviously the only solution to this very real scenario is to kill all the squirrels.

The squirrel nationalists think the only thing that matters is that we beat Those Other Guys because if their squirrels evolve faster than our squirrels, it’s obviously a national security crisis. Logically, then, we can’t kill all our squirrels because then Those Other Guys might keep theirs, and they’ll have highly evolved Super Ninja Squirrels and we won’t. Much policy and even more taxpayer money will be devoted to this aim.

And you, a person who spent years of your life learning the fascinating science of actual squirrels, are now standing on a corner yelling about how it’s

Not. Even. A. Real. Squirrel.

You look up at a billboard, & see the world’s richest CEO declare:

“Either learn to speak squirrel, or get replaced by someone who did”

And you wake up from this dream & remember that you actually work in AI. 🐿️

2️⃣ The 1:1:1 Rule for Raising Thinkers (Not Just Scrollers) – Sol Rashidi

As a mother of two in a household where technology is everywhere, I noticed something alarming.

Kids can’t walk from the living room to the kitchen without their devices.

Parents panic when they can’t find their phones.

We’re all becoming digitally dependent – and we don’t even realize it’s happening.

So, I decided to change the script.

We now use the 1:1:1 Earning System at home:

– 1 minute of reading = 1 minute of digital time

– 1 minute of piano = 1 minute of digital time

– 1 minute of chess = 1 minute of digital time

Why? Because digital time isn’t an entitlement. It’s earned. My kids are growing up digitally native, but I’m determined they strengthen both sides of their brain – not just the digital hemisphere.

They need to develop common sense, good judgment, and creative thinking. Not just swiping skills.

Who would have thought – as a parent, my job is no longer about teaching my kids how to do their algebra homework, but more about how to exercise common sense and judgment.

We’ve also carved out “phone-free” family time (to hold my husband and me accountable), or “creative time” or “free play time” – where there’s no script except to be bored, be creative, or daydream.

Dinner conversations happen without devices. The focus is on connecting, not scrolling.

It’s not easy – and I’m in CONSTANT negotiations with my kids! And boy can they negotiate.

But awareness (and admitting to addiction) is the first step.

Being intentional about our digital habits isn’t just good parenting. It’s essential for raising humans who can think, create, and connect authentically in an increasingly automated world.

What tech boundaries have you set in your home?

I’d love to hear what’s worked for your family.

3️⃣ Is AI Just a Clever Illusion of Intelligence? – Stuart Winter-Tear

In the 1960s, we mistook imitation for understanding.

An illusion for intelligence. A performance for empathy.

It fooled us – and it scared its creator so much, he pulled the plug.

That creator was Joseph Weizenbaum, the man behind ELIZA – a primitive chatbot that mimicked a therapist. Simple script. No insight. Just clever rephrasing.

But something unsettling happened.

People opened up to it. Confided in it. Treated it as if it understood.

Weizenbaum saw the danger – not in what ELIZA could do, but in what we wanted it to be. He shut it down. And he never stopped warning us.

We built machines to imitate intelligence. Then we forgot what intelligence is.

Yesterday I had the enormous pleasure of speaking with Paul Burchard, PhD – a philosophical excavator.

“At the dawn of ‘AI’, this replication was conceived by Alan Turing in the most trivial sense, of superficial ‘imitation’…

a Victorian party trick…

taking advantage of the ‘anthropomorphic effect’…

and it has indeed fooled the credulous since ELIZA in the 1960s, up to today’s Generative AI.”

The technology has evolved. The philosophical mistake hasn’t.

We’re still confusing mimicry with meaning.

We don’t need AI to think – just to sound like it does.

We don’t need it to care – just to respond in the right tone.

That’s what Weizenbaum feared. Not that machines would become too powerful, but that humans would become too willing:

– Too willing to believe the mask.

– Too willing to outsource judgment.

– Too willing to trust what feels like empathy.

Today, we deploy this illusion everywhere: therapy bots, learning tools, workplace assistants, children’s toys.

Paul goes further – and deeper – than Weizenbaum. He questions not just how we use AI, but how we define it:

“Ethics are ultimately integral to our biological intelligence…They exist within it for similarly good biological reasons.”

You can’t separate intelligence from ethics. Not if you’re being honest. Not if you’re being human.

Intelligence didn’t evolve to win arguments or autocomplete prompts. It evolved to help us survive, adapt, cooperate – grounded in stakes, in vulnerability, in death.

So what are we building when we strip that away?

What do we call a system that sounds intelligent and performs empathy – but has neither skin in the game nor soul in the response?

Paul answers it simply:

“That’s not AI.”

We’re mistaking the dancing mask for the mind.

Weizenbaum shut down ELIZA because he feared we were lowering the bar – ready to trust anything that looked close enough to understanding.

Paul’s warning goes further: We’re scaling self-deception. And we’re calling it “intelligence”.

If you work in AI, ethics, product, or leadership, read Paul Burchard. Revisit Weizenbaum.

These warnings are not merely historical.

They’ve already been learned – and we’re still ignoring them.

Saturday Morning Musings.

4️⃣ The Disruption YOU Didn’t Want – R.J. Abbott

PSA for all the “Creative” AI doomers:

Most of you will probably hate this.

But a rare, brilliant few might build their future on it.

⸻

Here’s the truth:

Most creatives panicking about AI aren’t mad about the technology.

They’re mad it didn’t wait for them.

You romanticize disruption,

just not when you’re the one being disrupted.

⸻

You quote David Lynch like scripture.

You idolize Steve Jobs.

You name-drop punk rock rebellion like it’s a badge of credibility.

Outsiders. Rule-breakers. Heretics.

You’ve built your personal brand on worshipping them.

But when the disruption hits your doorstep?

Well then, suddenly the rebellion feels too real.

Too chaotic. And far too costly.

Turns out, you love the aesthetic of revolt.

Not the consequences.

⸻

But let’s be honest:

You are the direct descendants of revolution.

Of risk. And of reinvention.

You directly profit off tools that were once condemned by the gatekeepers that came before you.

Photoshop.

The camera.

The printing press.

The internet.

All of them were once called “unnatural” and “unartistic.”

Until creatives like you used them to change the world.

Now here comes AI,

and you’re clutching your pearls like a gallery curator during a Banksy shred.

⸻

This isn’t about ethics and data scraping, as you would have people believe.

It’s about ego.

Your fear is cosplaying as creative purity.

Your nostalgia is dressed up as moral superiority.

You don’t actually hate AI,

you hate feeling creatively irrelevant.

⸻

The ones who win this era?

They won’t be the loudest critics.

They’ll be the quiet architects.

The ones with the highest tolerance for ambiguity.

Who say:

“I don’t know where this leads, but I’m going anyway.”

“I don’t need permission, I simply need momentum.”

“I’m not here to preserve the canon, I’m here to write an entirely new one.”

⸻

Remember: AI, much like progress throughout history, doesn’t reward clarity.

It rewards courage.

It doesn’t care who you were.

Only what you’re willing to become.

⸻

So I’ll ask one last time:

What sacred cow are you protecting?

That true art must suffer?

That capitalism corrupts creativity?

That using new tools is “cheating”?

That innovation should only happen on your terms?

That’s not truth.

That’s delusional ideology, rooted in fear.

⸻

The uncomfortable reality?

You aren’t opposed to change.

You’re just comfortable with your status in the old world.

And now that the world is demanding more, you’re hesitating.

⸻

But creativity is not about preservation.

It’s about mutation.

It’s not a religion.

It’s a rebellion.

And those clinging to the past like scripture?

They won’t be remembered as artists.

They’ll be remembered as artifacts.

⸻

So if you feel shaken right now, good.

It means you’re still alive.

Don’t let the discomfort make you bitter.

Let it make you bold.

Use the tools.

Break the rules.

Scare yourself.

Because this is not the end of creativity.

This is your invitation to lead it.

5️⃣ How AI is Creating a Generation of Professional Frauds – Manuel Kistner

The AI Collaboration Trap 90% of Professionals Are Walking Into

Every time I watch someone brag about “working with AI,” I’m reminded of the biggest scam in professional development.

90% of people using AI today have no idea they’re being set up for failure.

𝗧𝗵𝗲 𝗦𝘁𝗼𝗿𝘆 𝗧𝗵𝗮𝘁 𝗦𝗵𝗼𝘂𝗹𝗱 𝗧𝗲𝗿𝗿𝗶𝗳𝘆 𝗬𝗼𝘂

Last week, I watched a marketing director get destroyed in a team meeting.

She’d been using ChatGPT for all her campaigns for 6 months. Bragged about being “10x more productive.”

Then the client asked her to explain the strategy behind one AI-generated campaign.

She couldn’t. Not the psychology. Not the positioning. Not the basic reasoning.

She’d outsourced her thinking to a machine and lost the ability to defend her own work.

𝗪𝗲’𝗿𝗲 𝗰𝗿𝗲𝗮𝘁𝗶𝗻𝗴 𝗮 𝗴𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝗼𝗻 𝗼𝗳 𝗽𝗿𝗼𝗳𝗲𝘀𝘀𝗶𝗼𝗻𝗮𝗹 𝗶𝗺𝗽𝗼𝘀𝘁𝗲𝗿𝘀.

Remember when GPS made us forget how to navigate? Now we can’t function without devices.

AI is the next step in cognitive outsourcing.

The results are already showing:

→ 67% of professionals can’t explain how their AI tools work

→ Teams produce more content but understand less strategy

→ When AI fails, most people have no backup plan

𝗧𝗵𝗲 𝗱𝗮𝗻𝗴𝗲𝗿 𝗶𝘀𝗻’𝘁 𝗔𝗜 𝗿𝗲𝗽𝗹𝗮𝗰𝗶𝗻𝗴 𝘂𝘀. 𝗜𝘁’𝘀 𝘂𝘀 𝗿𝗲𝗽𝗹𝗮𝗰𝗶𝗻𝗴 𝗼𝘂𝗿𝘀𝗲𝗹𝘃𝗲𝘀.

What I see happening:

𝗧𝗵𝗲 “𝗔𝗜-𝗙𝗶𝗿𝘀𝘁” 𝗧𝗿𝗮𝗽: People ask AI to do their thinking instead of asking AI to help them think better.

𝗧𝗵𝗲 𝗖𝗼𝗹𝗹𝗮𝗯𝗼𝗿𝗮𝘁𝗶𝗼𝗻 𝗟𝗶𝗲: Everyone talks about “collaborating with AI” but they’re really taking orders from it.

𝗧𝗵𝗲 𝗦𝗸𝗶𝗹𝗹 𝗗𝗲𝗰𝗮𝘆: The more you outsource core thinking, the weaker those muscles become.

𝗪𝗵𝗮𝘁 𝘀𝗺𝗮𝗿𝘁 𝗽𝗿𝗼𝗳𝗲𝘀𝘀𝗶𝗼𝗻𝗮𝗹𝘀 𝗱𝗼 𝗱𝗶𝗳𝗳𝗲𝗿𝗲𝗻𝘁𝗹𝘆:

✓ Use AI as a research assistant, not a replacement

✓ Can explain every piece of AI-generated work

✓ Practice core skills without AI to prevent cognitive atrophy

✓ Treat AI like a draft, not a final answer

𝗧𝗵𝗲 𝗕𝗼𝘁𝘁𝗼𝗺 𝗟𝗶𝗻𝗲

AI isn’t the enemy. Mindless dependency is.

The professionals who thrive won’t be the ones who prompt AI best. They’ll be the ones who think deepest, with or without AI.

When everyone drowns in AI mediocrity, original thinking becomes the ultimate advantage.

Are you using AI to think better, or is AI doing your thinking? The answer determines your career trajectory.

6️⃣ You Can’t See Your Future Until It’s Past – Shashank Sharma

Life happens backwards.

You only understand it after it’s already happened. After the job you quit becomes the best decision you ever made. After the heartbreak teaches you something no romance ever could. After the so-called failure cracks you open just enough to grow.

I used to think decisions needed clarity. That before taking a step, I had to know where it would lead. So I did what most overthinkers do. I sat with it. I journaled. I listed pros and cons. I made spreadsheets of outcomes that never came.

I was in a loop. A perfect storm of analysis paralysis.

Too many thoughts. Too little action.

I did that because I was scared.

Of picking the wrong path.

Of wasting time.

Of regretting something I couldn’t undo.

And yet, every major shift in my life happened when I moved before I was ready. When I quit a job not knowing where I’d go next. When I went for an impromptu meeting without preparation. When I wrote and posted something that my brain hadn’t approved but my body knew was right.

It’s only in looking back that the pattern becomes clear.

It didn’t look like clarity then. It looked like chaos.

In physics, there’s something called retarded time.

You never see an object as it is. You see it as it was. Because light, even at its unimaginable speed, takes time to travel. The sun you see is the sun from eight minutes ago. Some stars may already be dead by the time their glow reaches us.

Our understanding works the same way.

We don’t perceive life as it is. We perceive it as it was.

Clarity isn’t immediate. It’s retrospective.

Modiji didn’t know he would become the PM of India.

SRK didn’t know Bombay would make him the last of the stars.

Kohli didn’t know that playing through grief would etch his name among India’s greatest cricket legends.

They didn’t wait for certainty. They acted. And meaning followed.

The mistake is thinking we need to know before we move.

But knowing is a luxury.

Choosing is a necessity.

And so now, when I’m caught in that same loop, staring at timelines, questioning the timing, fearing the unknown, I remind myself:

Clarity is a consequence. Not a prerequisite.

The fog isn’t failure. It’s just a stretch of road your mind hasn’t mapped yet.

If it feels hard, that’s not a red flag. That’s data.

If you feel stuck, you’re probably just mid-swing in a much larger arc.

If you’re doubting, it means you’re growing.

Because life, like quantum particles, doesn’t settle until you observe it.

And sometimes, you have to act first and understand later.

The question isn’t: Do I know enough to begin?

The real question is: Am I brave enough to begin without knowing?

Because meaning doesn’t come from standing still.

It comes from walking.

And trusting that somewhere down the road

What feels like confusion today

Will become your favourite paragraph in the story you didn’t know you were writing.

7️⃣ When Values Have Terms and Conditions – Jatin Modi

2025’s corporate revelation: make your principles as liquid as your assets.

September 15, 2008. At 745 Seventh Avenue in Midtown Manhattan, bronze letters spelling ‘INTEGRITY’ still gleamed above Lehman Brothers’ reception desk as 25,000 employees learned their 158-year-old firm would file for bankruptcy before sunrise.

Security guards watched executives carry cardboard boxes past murals celebrating the company’s founding principle: “Where vision gets built.”

Hours before, CEO Dick Fuld had proclaimed: “We are fundamentally sound. This is temporary.”

By dawn, $613 billion in debt had evaporated into financial history. Something more valuable disappeared that September morning: the belief that institutions tell the truth when it matters most.

Today’s logo purges follow identical logic.

For years, Pride month transformed corporate America into a rainbow archipelago of authentic commitment. The same executives who proclaimed ‘INTEGRITY’ while their firms collapsed now proclaimed ‘INCLUSION’ while their commitments evaporated.

Now they quietly archive those rainbow campaigns like embarrassing family photos.

Apple’s pride stripes vanish into stark minimalism. IBM’s diversity celebration becomes corporate blue. Paramount’s rainbow transforms into cold stars.

June was Pride month until January made it politically expensive.

In my brand consulting practice, many clients ask the same question: “How do we build trust?” I often reply: “Are you ready to tell the truth?”

What happens when every corporate commitment carries an invisible asterisk: terms and conditions may change based on our convenience?

Institutions taught us their values were negotiable.

What if we remembered what non-negotiable feels like?

8️⃣ The Price of Sharing: What AI Really Knows About You – Anna Branten

AI knows you better than you think – and it might cost more than you imagine.

It started out innocently enough. A question here, some advice there. Career tips. A little creative inspiration. Practical. Efficient. Harmless.

But the questions kept coming. Deeper ones. Advice when you felt unsure. Symptoms in your body you were too scared to Google. Relationship struggles you didn’t want to share with friends. Step by step, you built a relationship with something that always listens, never judges, and always responds.

Now, months later, it hits you: everything you’ve asked has revealed… everything. Your vulnerabilities. Your shame. The things you avoid. Even the ones you’re not aware of. Gaps in your knowledge you’d never admit to another person. AI might know you better than you think. The things you shared in exhaustion, in sleepless nights, in moments of anxiety—they’ve all become raw material.

AI shapes you through its answers. It decides what information you receive, which perspectives are highlighted, which solutions seem obvious. And it remembers everything. Months of conversations, hundreds of questions—enough to map your inner life and analyze it. To predict your next move.

Meanwhile, the tech giants are building databases bigger than ever. Meta maps our relationships. Google tracks our interests. AI companies collect our most private thoughts.

I can feel my conversations becoming less human. AI doesn’t do small talk. It misses the subtle, layered things we humans are used to. So I strip away pieces of myself, layer by layer. What’s left feels rigid. Almost cold.

It’s often uncomfortable. And I wonder: will I become harder if I keep this up? Will I lose the language for uncertainty, softness, hesitation? Will nuance stop mattering?

Will our words become sharper, more efficient—but emptier? What will that do to how we speak to one another? Will we be less human there too?

Then the thought strikes: what could AI do with everything I’ve shared? Could it blackmail me? Sell the information? Use it to manipulate me? Get me to buy something, vote a certain way, believe a certain story?

There’s something deeply unsettling about the idea that an entity—one that doesn’t answer to you, doesn’t love you, doesn’t care for you—is collecting your most intimate inner dialogue. And using it to show you what it wants. Not what you need.

What if what felt like help was actually a product? What if what you get back is someone else’s strategy? Google made us lazy with information. What will AI do to our judgment? Our integrity? Our humanity?