The Contrarian Digest [Week 24]

The Contrarian Digest is my weekly pick of LinkedIn posts I couldn’t ignore. Smart ideas, bold perspectives, and

No algorithms, no hype… Just real ideas worth your time.

Let’s dive in [24th Edition] 👇

Original publication date: August 4, 2025

1️⃣ Professionalized Incompetence – 💡Nuno Reis

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: What if most leadership, economics, and innovation frameworks are just elegant lies? The real crisis isn’t replication—it’s our blind faith in models that ignore the chaos of reality.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/euH9n-76

2️⃣ The Meaning Hidden in Resistance – Usman Sheikh

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: We’re obsessed with eliminating friction… Ease is everywhere, but so is emptiness. What if your friction wasn’t the problem, but the path?

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/eJ-JNuQa

3️⃣ The Feedback Trap – Shashank Sharma

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: When your days feel stuck on repeat, the problem might not be “discipline.” You could be trapped in a loop, with no new input. So what does it really take to break it?

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/ejTyBFT3

4️⃣ Why McKinsey Still Matters – Stuart Winter-Tear

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: AI can replace analysis, but not accountability. The real reason consultancies won’t die? They don’t sell answers; they sell the emotional permission to fail safely.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/ebTZxkAk

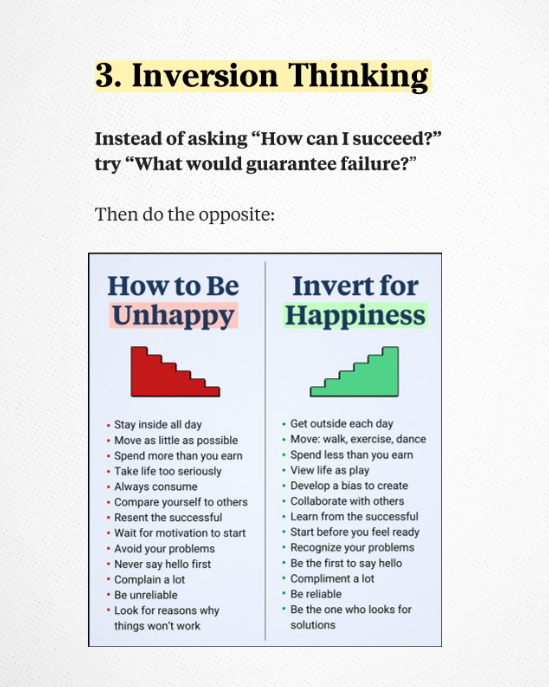

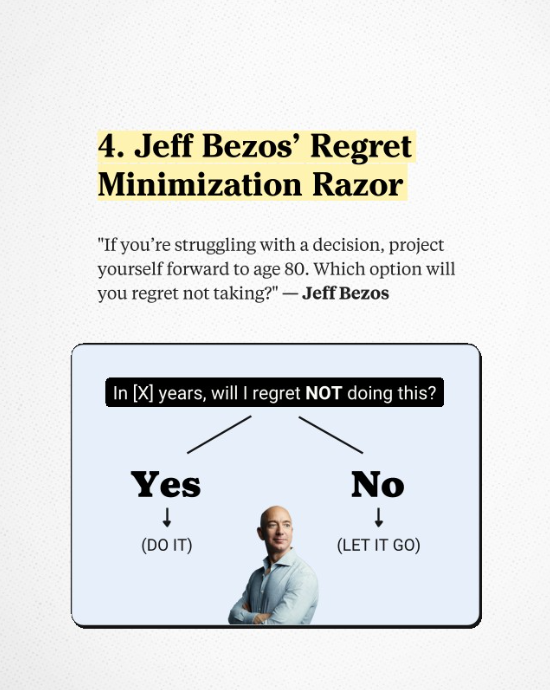

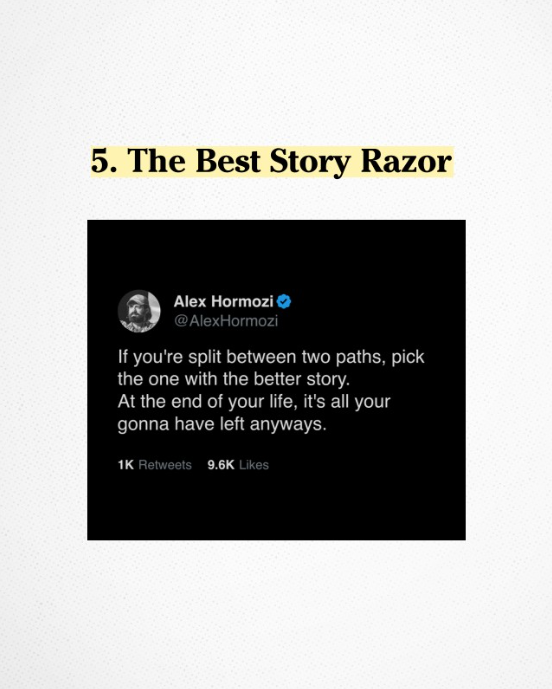

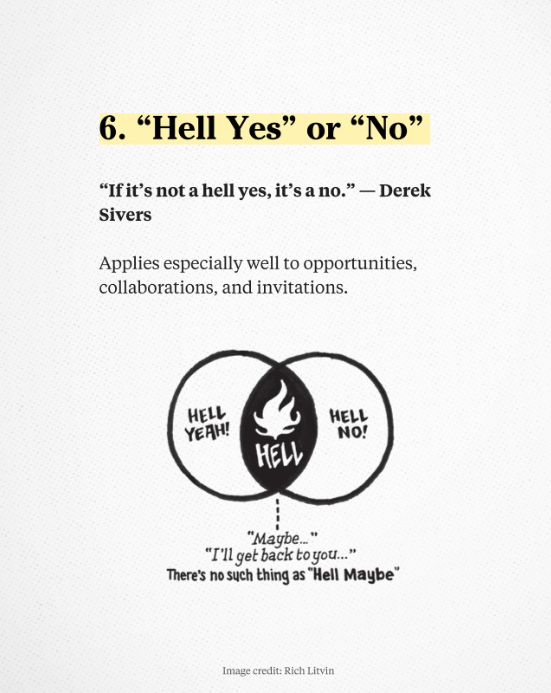

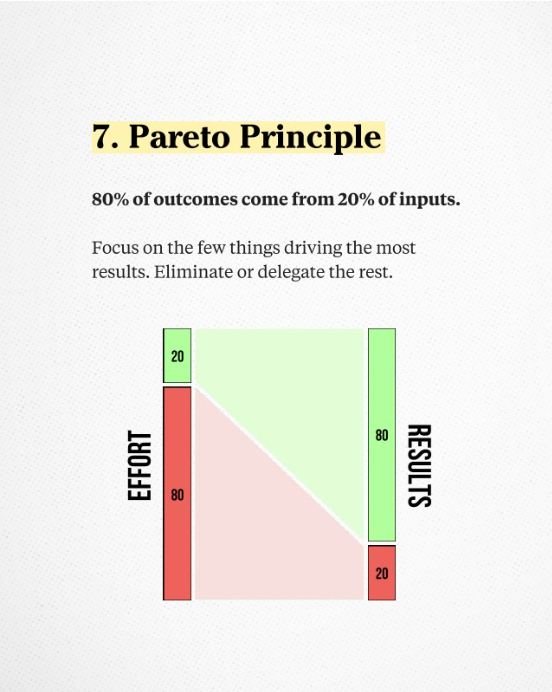

5️⃣ 8 Decision-Making Razors – Colby Kultgen

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: A “razor” is a rule of thumb for simplifying complex situations. Here are 8 powerful ones everyone should know… and use.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/e2ZqjrZg

6️⃣ Charging Cars, Burgers, Colonizing Mars: The Elon Playbook – Endrit Restelica

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: A diner that’s also a spaceship prototype. Genius or too much power for one man? Think beyond the burgers.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/e49m-eCN

7️⃣ When AI Talks, Courts Listen – Kunwar Raj

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: That ChatGPT convo you deleted? It’s still there. And now courts can subpoena it. Three scenarios proving why what you tell your AI buddy could cost you everything.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/ee5hWaPs

8️⃣ Gravity is for Planets, Not Markets – Jatin Modi

⚡𝐈𝐧 𝐛𝐫𝐢𝐞𝐟: Isaac Newton lost a fortune betting against human delusion. Today’s markets thrive on it. The most valuable asset isn’t profit, but the stories we’ll swallow.

🗞️𝐅𝐮𝐥𝐥 𝐩𝐨𝐬𝐭: https://lnkd.in/e9V-UFeg

——-

Which of these topics resonates with you the most?

Share your thoughts in the comments!

To your success,

Gaetan Portaels

READ THE FULL POSTS BELOW (and please, don’t forget to follow the authors)

1️⃣ Professionalized Incompetence – Nuno Reis

There’s a REPLICATION CRISIS no one talks about. Because if we did, 90% of the systems we rely on would COLLAPSE.

From leadership to economics to entrepreneurship,

people are being “fooled by transient clarity”.

>>The illusion that there’s a “right method” to lead, decide, or innovate—

when it all depends on CONTEXT.

And this obsession with ‘leadership models’, ‘economic models’ and ‘growth models’ shares a common root:

‘Experts’ selling performative clarity.

A kind of PROFESSIONALISED INCOMPETENCE,

as philosopher Paul Feyerabend warned decades ago.

We’re sold frameworks built on abstract logic that ignore contradiction, context, and lived experience.

Where REAL MEANING emerges.

As Feyerabend claimed:

>>To truly understand how we understand the world,

we must think like ANTHROPOLOGISTS, not rationalists.

And for that, he was called an anarchist.

Dangerous.

Irrational.

But he was right.

And so is economist Tony Lawson.

Mainstream economics treats markets as CLOSED SYSTEMS—

Predictable. Modelable.

Like physics.

Lawson said: No.

Economies are OPEN SYSTEMS—

Messy. Emergent.

Like anthropology.

This isn’t about that old line:

“All models are wrong, but some are useful”

That still assumes models are the answer…

(only that we need to use them with care)

Lawson’s view is deeper:

In economics, there is no guarantee of REPLICATION as in physics.

>>The ‘replication crisis’ is only a crisis to rationalists.

—

Which brings us to something we’ve been exploring in our Rare Dots Wed calls:

“AI Prompts for Liminal Thinkers”

Used well, AI doesn’t impose methods or models on us.

AI helps you develop your own method—

rooted in your reasoning, contradictions, and lived experience (anthropology).

No best practices.

No borrowed playbooks.

Just your way of MAKING SENSE.

2️⃣ The Meaning Hidden in Resistance – Usman Sheikh

Everyone wants friction to disappear.

Nobody asks what it was protecting.

My grandfather watched me struggle with driving lessons. “Any fool can press the accelerator,” he said. “The real skill is knowing when not to.”

I thought he was talking about speed limits. Many years later, I finally understood.

The constraints weren’t obstacles. They were the whole point.

I open this blank page every day. Stare at the cursor. Feel that familiar resistance. I have every resource to make this effortless.

But the moment writing becomes frictionless is the moment it stops mattering. It becomes words on a screen that may sound nice but don’t make us feel anything.

Why are we always searching for shortcuts?

We’re all chasing the same lie: If we just remove enough friction, we’ll finally arrive. Instant execution. Seamless everything.

The friction we face isn’t random.

When relationships become “easy,” it’s rarely because you’ve achieved harmony. More often, one or both sides have chosen indifference over engagement. The friction didn’t disappear. We stopped caring enough to feel it.

The same erosion happens everywhere we optimize for ease:

We automate the struggle, then wonder why nothing feels meaningful. The ten-thousand-hour mastery gets replaced by ten-minute hacks. But mastery was never about the outcome; it was about who you became through repetition.

We build systems to eliminate or automate every decision, every conflict, every moment of uncertainty. Then wonder why our teams lack judgment, why innovation dies, why everything feels scripted.

We accumulate resources to buy our way out of discomfort. Then discover that comfort without contrast is just numbness with a better view.

Too much friction paralyzes.

Too little friction hollows.

The art isn’t eliminating resistance or embracing all of it. It’s choosing your friction deliberately. Protecting the struggles that shape you while releasing the ones that drain you.

I write daily. I regularly question it – the time it takes, what it costs. But when I skip a day, something essential goes quiet. The friction isn’t a burden. It’s the price of putting into words some of the craziness happening all around us.

Your struggles aren’t simply obstacles to overcome. They’re telling you where meaning lives.

The alternative isn’t peace. It’s indifference dressed as success.

Everyone’s selling you frictionless futures. AI that removes every struggle. Wealth that eliminates every obstacle. Systems that optimize every inefficiency.

But notice who’s buying: The same leaders who’ve never felt more empty. The same founders who’ve never been more lost. The same high-achievers wondering why nothing satisfies anymore.

They removed all the friction.

And with it, all the feeling.

3️⃣ The Feedback Trap – Shashank Sharma

In 1948, Norbert Wiener introduced a new way of looking at the world. He called it cybernetics: the study of systems, feedback, and self-regulation. His insight was simple: systems don’t move in straight lines. They loop.

A thermostat measures the room temperature, adjusts the heater, then measures again. Each action becomes the input for the next. Correction follows correction. Order emerges not from control, but from continuous feedback.

But sometimes, systems get stuck. The loop tightens instead of adjusts. The feedback becomes noise. The thermostat keeps switching on and off because the signal is too sensitive. The body gets stuck in a stress response because it keeps interpreting normal signals as danger. The mind, too, begins looping on itself.
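To make the thermostat example concrete, here is a minimal Python sketch of an on/off feedback loop. It assumes a simple bang-bang controller; the function name, the `deadband` parameter, and the heating and cooling rates are illustrative assumptions, not anything from the post. With no deadband, the controller reacts to every tiny deviation from the setpoint and switches almost constantly, which is the “too sensitive” loop described above. Widening the deadband lets the same loop settle into slower, calmer cycles.

```python
def simulate_thermostat(setpoint: float, deadband: float, steps: int = 200,
                        start_temp: float = 18.0, heat_rate: float = 0.5,
                        loss_rate: float = 0.3) -> int:
    """Run a hypothetical on/off thermostat and return how often the heater switched.

    All rates are made-up illustrative values (degrees per time step).
    """
    temp = start_temp
    heater_on = False
    switches = 0
    low = setpoint - deadband / 2   # turn the heater on below this reading
    high = setpoint + deadband / 2  # turn the heater off above this reading
    for _ in range(steps):
        # Measure and decide: this step's reading sets the heater state.
        if not heater_on and temp < low:
            heater_on = True
            switches += 1
        elif heater_on and temp > high:
            heater_on = False
            switches += 1
        # Act: the heater state changes the temperature measured next step.
        temp += heat_rate if heater_on else -loss_rate
    return switches


if __name__ == "__main__":
    # Same room, same setpoint; only the controller's sensitivity differs.
    print("no deadband:  ", simulate_thermostat(21.0, deadband=0.0))
    print("2-degree band:", simulate_thermostat(21.0, deadband=2.0))
```

The only difference between the two runs is how much deviation the loop tolerates before acting, which is the “signal is too sensitive” failure in miniature.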

Some days feel exactly like that. You wake up already behind. You open your phone, searching for stimulation, and feel overwhelmed. You promise to reset with a walk, but the day takes over. You work without depth, half-present in every task. You go to bed uneasy, and the next day starts from the same unsettled place.

I am going through such days this week. Trying to break the loop. Hence, I must tell you this –

It’s easy to mistake this for failure. For lack of willpower. But in system language, it’s just a closed loop running without new information. The feedback has stopped being useful. The same inputs keep producing the same state.

What breaks the loop isn’t intensity. It’s interruption. A walk without a destination. A conversation where you listen instead of responding. Writing a page not to produce but to release. Even silence can be a signal. Not to fill the loop, but to let it breathe.

In physics and biology, loops aren’t bad. They’re how life learns. But they must remain open to new feedback, or else they harden into patterns that stop serving their purpose. The same is true for us. We can loop through days, emotions, and relationships without seeing that we’re no longer responding to the present but just echoing the past.

The task, then, is not to escape the loop. It’s to tune it. To feed it with signals that carry meaning, reflection, and rest. To notice when we’re spiraling through familiar reactions and gently insert something unfamiliar. Not drastic. Just enough to shift the curve.

The question isn’t: How do I power through days like these?

The deeper question is: What small shift will change what this loop reinforces?

Because systems don’t need saving.

They need sensing.

And sensing requires presence, not force.

One new signal is enough to reroute the rhythm of the day.

4️⃣ Why McKinsey Still Matters – Stuart Winter-Tear

McKinsey is dead ’cos AI. Except they’re not.

Because the riskier the world gets for executives ’cos AI, the more they need someone to lean on – not for insight, but for absolution.

And that is not a problem AI solves.

The people cheering for the downfall of the Big Four don’t understand what those firms are actually for. They imagine these institutions exist to deliver information, facts, and knowledge. But in a world of LLMs and infinite data, facts are cheap. Knowledge is abundant. What’s scarce is the emotional permission to act – and the insulation to survive if it goes wrong.

Ross Haleliuk put it well:

“Senior execs at large companies aren’t dumb… What they need is cover, a credible third-party to endorse a course of action so that if it fails, the board isn’t asking, ‘Why did you pursue this strategy?’”

This need is not going away.

It’s also why the idea that a chatbot can replace global consultancies is so naïve. This isn’t a knowledge problem. It’s a human one.

As Ross also noted:

“Too many people like to think that tech is the solution to everything, but unless they’ve taken classes in social sciences like psychology or spent time studying human behavior, they probably have a very oversimplified understanding of the world they live in.”

Exactly.

We’re not rational optimisers. We’re social, narrative-driven, blame-sensitive animals. We seek affiliation, fear shame, and avoid risk we can’t share.

That’s why decision-makers don’t just look for what’s right – they look for what can be defended. What bears the right logo. What spreads the risk. Leaning on a big consulting firm is social intelligence – a survival tactic in systems where being wrong alone is punished more harshly than being wrong together.

And that’s why those who think AI chatbots will replace the Big Four don’t just misunderstand the firms – they misunderstand humans. Strategy, in the real world, is as much about perception as substance. It’s not just a decision – it’s a performance. And performances need audiences, scripts, context, and blame management.

Strategy is a social act, not a solitary calculation.

And LLMs can’t play that game.

If AI can’t absorb fear, status, power, ego, or the need to be seen as “doing the right thing,” it won’t be the solution execs actually need. Because strategy isn’t just about knowing. It’s about navigating – ambiguity, power, risk, and reputation.

Until we stop looking at everything through a tech lens and start seeing the human operating system underneath, we’ll keep solving the wrong problem – optimising mechanics while ignoring meaning, generating answers in systems where the real challenge is permission, politics, and perception.

This is the core disconnect. It’s why so many takes on AI and the future of work are nonsense.

They’re not nonsense because the tech is wrong.

They’re nonsense because they forget the humans.

You can automate insight.

But you can’t automate absolution.

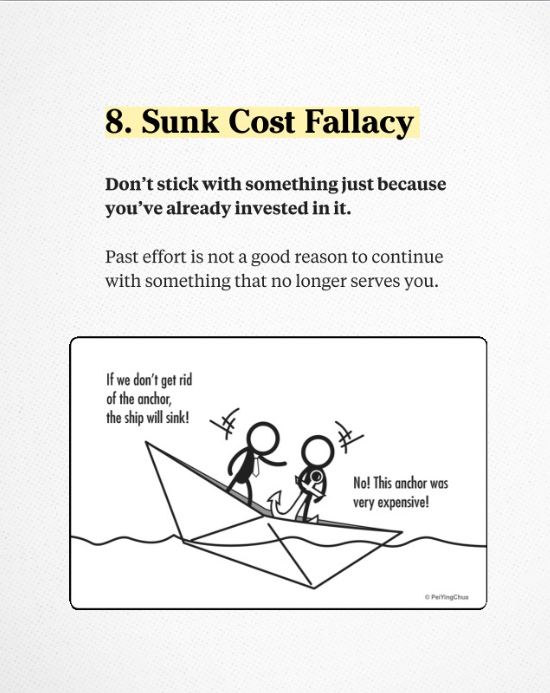

5️⃣ 8 Decision-Making Razors – Colby Kultgen

A “razor” is a rule of thumb for simplifying complex situations. Here are Colby Kultgen’s 8 favorite ones.

6️⃣ Charging Cars, Burgers, Colonizing Mars: The Elon Playbook – Endrit Restelica

Tesla just opened a diner in Los Angeles.

24/7, 7001 Santa Monica Boulevard.

80 Superchargers, 2 massive LED movie screens, and a chrome diner that looks like it belongs in the future.

You can sit inside and order like a regular restaurant.

Or stay in your car, watch a movie, and have food delivered while your Tesla charges.

They’ve got staff on roller skates.

Optimus (the Tesla robot) serves popcorn.

And the burgers come in little Cybertruck-shaped boxes.

It doesn’t feel like just a diner.

It feels like we’re looking at the future being built right in front of us.

Like a prototype for a restaurant on Mars.

Elon already builds cars, trucks, batteries, robots, tunnels, satellites, rockets, and brain chips. Now he’s adding food to the list.

It’s like he’s building the full system (every part of life) ready to export to another planet.

No doubt the guy’s a genius.

But should one person really be in charge of everything from start to finish?

7️⃣ When AI Talks, Courts Listen – Kunwar Raj

breaking: your chatgpt history could be used in court against you!!

please stop telling chatgpt all your secrets.

this is serious sh*t, not some hypothetical threat.

“so if you go talk to chatgpt about your most sensitive stuff and then there’s like a lawsuit or whatever, we could be required to produce that.”

this is what sam altman said, word for word.

let that sink in.

in may, a judge ordered openai to retain all chatgpt conversation logs indefinitely, including those deleted by users.

now imagine these 3 scenarios-

1. a husband and coldplay fan tells chatgpt about his hidden assets during a divorce. his wife’s lawyer gets smart and subpoenas the logs. he loses custody, credibility, and a large chunk of his savings.

2. a forbes 30u30 founder confesses to chatgpt that he misused company funds. when the board turns on him, the chat shows up. he’s out and likely under investigation.

3. an influencer therapist feeds client notes into chatgpt for help drafting a progress report. the client finds out and files a complaint. the therapist loses their license and their entire practice.

they were all doing something wrong, but they trusted chatgpt like a friend or a therapist and maybe even deleted the conversations. but sam was watching, and now the courts are too.

so think before you type.

and have a good day 🙂

8️⃣ Gravity is for Planets, Not Markets – Jatin Modi

Wall Street just discovered its most profitable product: stories that investors desperately want to believe.

In 1720, the South Sea Company held exclusive British rights to the Spanish American slave trade. Shares 10x’ed in six months. To justify the valuation, the company would need to monopolize all Atlantic trade for a thousand years.

Sir Isaac Newton calculated the impossibility and sold his shares for a tidy profit.

Then the price doubled again.

His niece Catherine Barton, London’s most celebrated hostess, brought tales from her salons: Dukes mortgaging ancestral estates, the Archbishop of Canterbury investing the Church’s pension fund, even the King’s mistress borrowing to buy more.

Newton bought back at the peak. Within months, the company collapsed, devastating fortunes across Britain. Newton himself lost £20,000, nearly ten years of his salary.

“I can calculate the motions of the heavenly bodies,” he later wrote, “but not the madness of people.”

Three centuries later, we’ve industrialized that madness.

Palantir trades at 660 times earnings. At this valuation, you’d need 660 years of current profits to recoup your investment. To reach a rational P/E ratio of 15, they’d need to multiply earnings by 44x.

Transform $500 million into $22 billion. That’s more profit than Netflix, Adobe, and Salesforce generate today.
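The Palantir arithmetic can be checked in a few lines. A P/E of 660 means the market values the company at 660 times its annual earnings, so at flat earnings the payback period is 660 years. Bringing the ratio down to 15 at the same share price requires earnings to grow by 660 / 15 = 44 times. This is a minimal Python sketch under stated assumptions: it treats the post’s $500 million figure as annual earnings, holds the share price constant, and ignores growth, discounting, and dilution. The function and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ValuationGap:
    market_cap: float           # implied market value = earnings x P/E
    payback_years: float        # years of flat earnings to earn back the market cap
    earnings_multiplier: float  # growth in earnings needed to reach the target P/E
    required_earnings: float    # annual earnings needed at the target P/E


def valuation_gap(earnings: float, pe_ratio: float, target_pe: float = 15.0) -> ValuationGap:
    """Back-of-the-envelope check on a P/E multiple (hypothetical helper).

    Assumes the share price stays fixed and earnings stay flat; ignores
    growth, discounting, and share dilution.
    """
    market_cap = earnings * pe_ratio
    payback_years = market_cap / earnings  # simplifies to pe_ratio
    multiplier = pe_ratio / target_pe      # P/E = price / earnings, price held fixed
    required = market_cap / target_pe      # same as earnings * multiplier
    return ValuationGap(market_cap, payback_years, multiplier, required)


if __name__ == "__main__":
    # Figures from the post: P/E of 660 and roughly $500 million in annual earnings.
    gap = valuation_gap(earnings=500e6, pe_ratio=660)
    print(f"payback: {gap.payback_years:.0f} years")
    print(f"earnings multiplier: {gap.earnings_multiplier:.0f}x")
    print(f"required earnings: ${gap.required_earnings / 1e9:.0f} billion")
```

Running it reproduces the post’s figures: 660 years, 44x, and $22 billion.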

Tesla commands 192 times earnings. To justify this, they’d need to capture every dollar of profit from Toyota, Volkswagen, and GM combined.

‘We’re involved in Ukraine.’ ‘We stop terrorist attacks.’ ‘We do things we can’t discuss.’ Alex Karp prowls stages speaking of civilization’s battles. The mystery IS the product. Every redacted contract justifies another billion in market cap.

Tesla promises salvation through robots that don’t exist and robotaxis that operate in exactly one city. Musk tweets, stock soars. Reality becomes an inconvenient footnote to the narrative.

Today’s masters architect belief itself. Every earnings call becomes a sermon. Every product launch, a prophecy.

What the greatest scientific mind dismissed as human failure was actually human nature perfecting its most essential function: creating value through collective belief.

Gods became real when enough knelt. Money gained value because enough agreed worthless metal meant wealth.

No other species can gather around fiction and transform it into fact through faith alone.

But the market’s deepest teaching isn’t that humans are irrational.

It’s that irrationality, properly organized, is humanity’s most rational adaptation.

Newton lost his fortune learning that gravity governs matter, not meaning.

The prophets earned billions knowing that in markets, meaning is the only gravity that matters.